Before the World Wide Web took hold, there was the Gopher protocol, and the first search engines I used were Archie, Jughead and Veronica! Information lived in files that good-natured people made available, or that you got permission to access as needed. Even extracting nuggets of information through text-based hierarchical systems was exhilarating. I loved it and spent hours in front of those green screens!

This was 1991. Exciting times!!

Fast forward to 2020, and we probably need personal search engines for our disorganised file systems spread across multiple devices, including all the images that we click and store.

Well, at least I do! Extend the information deluge to enterprises, and it is no longer amusing. Forget storage; useful and timely retrieval of information becomes critical. And how does one not just institutionalise knowledge but also share it?

Meanwhile, conversational interfaces are spreading from search to service desks, helping people navigate the forests of knowledge and information held in enterprise systems.

Traditionally, we have navigated systems through hierarchical structures – login, directories, folders and files. We have continued that journey even into our personal lives. But when we retrieve information from our brains, it does not happen the same way!

Let me illustrate. From the songs I heard at a particular time, I can remember the year and even the places where I listened to them. It was in January 1990 that I first heard Phil Collins’ ‘Another Day in Paradise’, and it shocked me into awareness of homelessness in the US. I remember the place, the room and the people I was with at that exact moment. When I see smoke, I am unfortunately reminded of the Twin Towers of the WTC. And if I rack my brain to find where my glasses are, I can never remember until I realise that they are literally on my head.

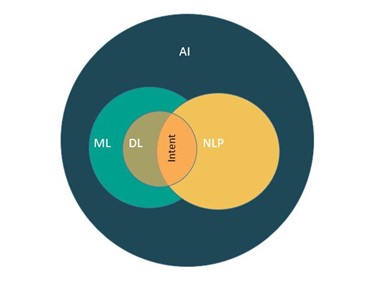

How does one get to intent using text alone? Or understand sarcasm? I recall a hackathon we entered for a German insurance company. The use case was to analyse free-form complaint letters. We got through the conversational bit fine, and then the company bowled us a googly (a cricketing term for a delivery that turns the unexpected way): “Can you find sarcasm in the tone of the letters?” Huh? Detecting irony is hard enough; how can anyone grasp sarcasm in German, with all its linguistic nuances, without being a native speaker?

I have used Google Translate extensively in my itinerant professional life, including to get a haircut in Turkey. My hair stands up like a porcupine’s quills if it is cut too short. I tried telling the barber not to cut my hair too short and received a quizzical look, until I typed the phrase into Google Translate and followed up by showing him a picture of a porcupine. Well, I had the best haircut ever in Istanbul!!

Context becomes critical, and so do boundaries. Teaching the ‘child-BOT’ cannot be done without boundaries, yet it also needs to learn by itself. The nuances of spoken, colloquial language do not help either.

But I strongly believe that the more clearly the contextual boundaries of use cases are defined, the better conversational interfaces and bots will be. Marrying these use cases, by harnessing what has been learned and broadening the boundaries, would dramatically enhance the much-needed institutionalising of knowledge and information. More critically, it would improve the customer experience by enhancing the ability to “learn” intent.

context has “understood” the employee and is able to “learn” about intent. Most enterprises already have an IT Helpdesk BOT, so there is already “knowledge” about the employee. Combining the IT Helpdesk, HR and Finance BOTs allows the BOT to leverage ML/DL. Possibly, that helps us understand intent better in the context of the corporate world.

One has nothing but love for those brilliant techies and scientists who brought together AI, Linguistics and Learning! And as we continue our journey into the world of Digital Conversations, the holistic digital experience of harnessing knowledge with intent becomes critical.

P.S. Love you guys for introducing it all!

• The Gopher – thanks to Mark McCahill and his amazing team!

• Archie (FTP archive indexing and search tool) – Alan Emtage, Bill Heelan, Peter Deutsch

• Jughead (search engine on Gopher Protocol) – Rhett Jones

• Veronica (search engine on Gopher Protocol) – Steven Foster and Fred Barrie

I can only say, as in Wayne’s World, “We’re not worthy!” Thank you!!

Isaac is Senior Vice President & Head of UK & Europe at Happiest Minds Technologies. He brings diverse experience spanning strategy, operations and delivery through to building markets in India, the UK & Europe. He has over 30 years of experience in the technology industry, 20 of which have been in the UK & Europe. He has worked with companies such as Fujitsu, Steria (Xansa), TCS, E&Y, and Strong Memorial Hospital.