What This Blog Is (And Isn’t)

Learning a new technology can be intimidating, especially when you are exposed to the complexities of the topic early on.

This blog is an attempt to simplify building AI applications by hiding away the underlying complexities. We won’t delve into the inner workings of an LLM; instead, we’ll focus on using it to solve real-world problems.

We’ll explore concepts like Retrieval-Augmented Generation (RAG), AI agents, and tools, along with code examples for practical implementation.

Understanding AI Buzzwords

Let’s look at the key components and concepts that make AI applications so powerful.

Large Language Model (LLM)

Think of an LLM as an application with APIs that can process requests and generate responses in natural language (one that is used by humans).

How does an LLM do it?

When you send a query to an LLM, it constructs a response by predicting one word at a time.

First, the query is split into tokens (for simplification, assume each word is a token).

Then, these tokens are converted to a numerical representation called an embedding.

These embeddings are passed through a series of neural network layers to calculate a probability score for every possible next word (the LLM’s training data plays a key role in this step).

After this, the most suitable next word is selected (typically the one with the highest probability, or one sampled from the top candidates).

Then, it takes both the query and the predicted word to generate the following word, continuing this process until a full response is formed.
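The loop described above can be sketched in a few lines of Python. This is only a toy illustration, not how a real LLM is implemented: the `TOY_LOGITS` table is a hypothetical stand-in for the neural network, it looks only at the last word instead of the full context, and it always picks the highest-probability word.

```python
import math

# Hypothetical stand-in for a trained model: for a given last word,
# raw scores for each candidate next word. A real LLM computes these
# scores with a neural network over the whole context.
TOY_LOGITS = {
    "the": {"cat": 2.5, "dog": 1.5},
    "cat": {"sleeps": 2.0, "runs": 1.0},
    "dog": {"barks": 2.0},
    "sleeps": {"<end>": 3.0, "soundly": 1.0},
    "runs": {"<end>": 1.0},
    "barks": {"<end>": 1.0},
    "soundly": {"<end>": 1.0},
}


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def next_word_probabilities(context):
    """Probability of each candidate next word, given the context so far."""
    last_word = context[-1]
    # Unknown words simply end the response in this toy model.
    return softmax(TOY_LOGITS.get(last_word, {"<end>": 0.0}))


def generate(query, max_words=10):
    """Predict one word at a time until <end> or the word limit."""
    context = query.lower().split()  # simplification: one word = one token
    response = []
    for _ in range(max_words):
        probs = next_word_probabilities(context)
        word = max(probs, key=probs.get)  # pick the most likely next word
        if word == "<end>":
            break
        response.append(word)
        context.append(word)  # the prediction becomes part of the input
    return " ".join(response)


print(generate("the"))  # prints: cat sleeps
```

Notice how each predicted word is appended to the context before the next prediction. That feedback step is what lets the response grow one word at a time.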

LLMs come in two main types: closed-source and open-source.

- Closed-source LLMs (such as those from OpenAI and Anthropic) run on the provider’s servers and require API keys for access.

- Open-source LLMs can either run locally (refer to the code example section for setup instructions) or be accessed through hosted services, often requiring API keys as well.

Prompt

A prompt is a set of instructions, in plain text, given to an AI system. It acts as a guide, shaping how the AI should respond by providing context, tone, or specific details.

When you send a query to an LLM, your AI application uses the prompt to turn the query into a well-crafted instruction that improves the accuracy and relevance of the AI’s reply.

For example:

| You are an AI assistant that provides helpful responses to user queries. Current conversation: {history} Human: {input} AI: |

Here, the user’s query is replaced by the variable {input}. All previous interactions in the conversation are stored in the variable {history}. This allows the LLM to maintain context, helping the AI generate more relevant and coherent responses.
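To make the variable substitution concrete, here is a minimal sketch that fills the template using plain Python string formatting. The `build_prompt` helper and the sample conversation are made up for illustration; frameworks like LangChain provide their own prompt template classes that do this for you.

```python
# The prompt from the example above, with {history} and {input} placeholders.
PROMPT_TEMPLATE = (
    "You are an AI assistant that provides helpful responses to user queries.\n"
    "Current conversation:\n"
    "{history}\n"
    "Human: {input}\n"
    "AI:"
)


def build_prompt(history, user_input):
    """Fill the template. `history` is a list of (human, ai) message pairs."""
    history_text = "\n".join(f"Human: {h}\nAI: {a}" for h, a in history)
    return PROMPT_TEMPLATE.format(history=history_text, input=user_input)


# Hypothetical earlier turn in the conversation.
history = [("Hi, I'm Sam.", "Hello Sam! How can I help?")]
prompt = build_prompt(history, "What is my name?")
print(prompt)
```

Because the earlier turn is part of the final prompt text, the LLM has what it needs to answer "What is my name?" even though the model itself remembers nothing between calls.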

LangChain

LangChain is a framework designed to simplify working with Large Language Models (LLMs) for building AI-powered applications. It helps developers manage prompts, memory, and interactions with different models efficiently.

While LangChain is a popular choice, other frameworks like LlamaIndex (for data retrieval) and Haystack (for search-based AI applications) can also be used. However, for our solution, we will be using LangChain due to its flexibility and ease of use.

Key Features of LangChain:

- Prompt Management — Helps structure and reuse prompts effectively.

- Memory — Maintains conversation history for contextual responses.

- Integrations — Supports various LLMs (OpenAI, Anthropic, local models) and data sources.

- Agents & Chains — Enables complex workflows by combining multiple AI steps.

Retrieval-Augmented Generation (RAG)

RAG is like giving your AI a place to look up information relevant to your input query.

LLMs face many limitations, but a critical one is that they can’t answer questions about data they weren’t trained on — whether it’s your company’s private documents, your personal files, or specialized databases. RAG helps overcome this fundamental challenge.

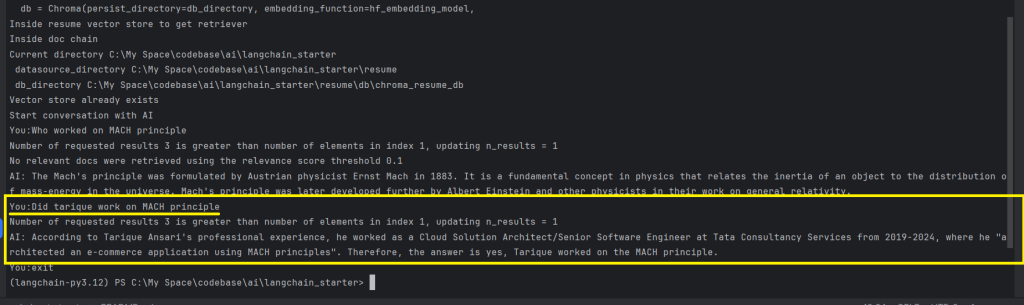

Here’s a simple example: Imagine a talent acquisition team with many resumes that needs to find the best candidates for a job opening.

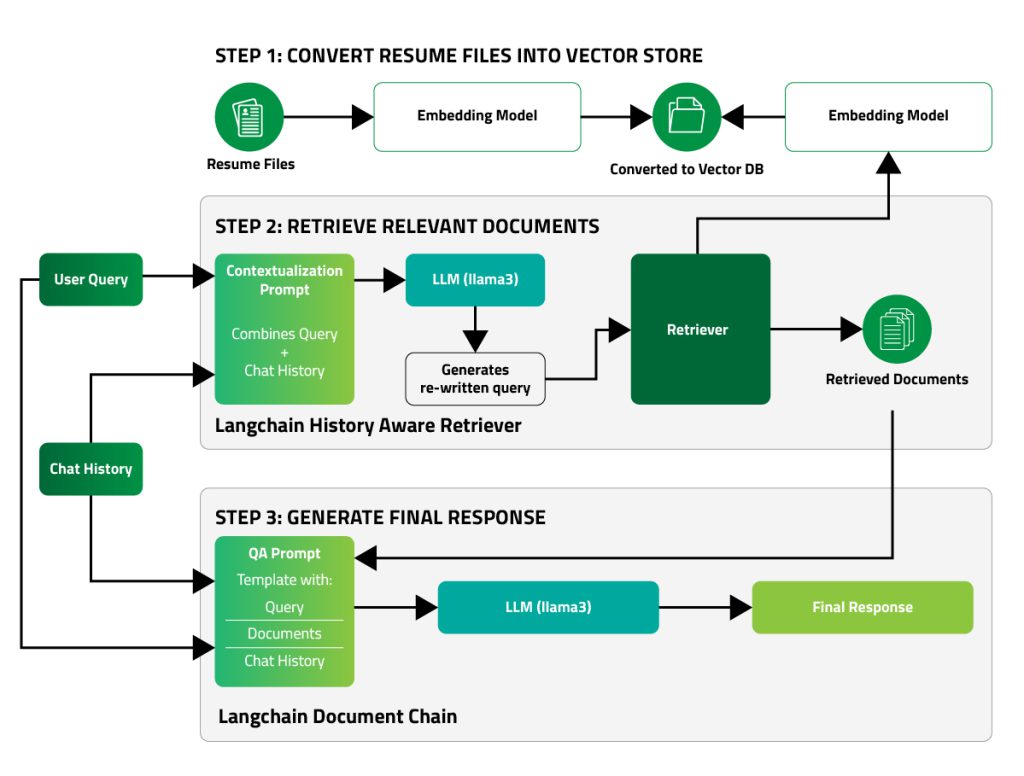

The solution works in three steps. We will use LangChain’s retrieval chain, which takes two inputs, a history-aware retriever and a document chain, and generates a response.

Step 1 — Convert resume files into vector store

Use an embedding model to convert each resume into numerical vectors (think of them as unique addresses in a digital library) and store them in a vector database like ChromaDB.

Step 2 — Retrieve the relevant document

The user query and chat history are combined and passed to an LLM for rephrasing and refinement. This updated query, now enriched with historical context, is then sent to the vector database. The same embedding model that was used to build the database converts the refined query into a vector, and the vector database returns the resumes whose vectors most closely match it.

Step 3 — Generate final response

The retrieved documents, along with the user query and chat history, are fed into the LLM. The model then generates a response based on the information extracted from the documents returned by the vector database.
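The three steps can be sketched end to end with the standard library alone. Everything here is a simplified stand-in and an assumption made for illustration: `embed` counts words instead of calling a real embedding model, `ToyVectorStore` plays the role of a vector database like ChromaDB, the resumes are invented, and the final prompt is printed rather than sent to an LLM. The query rephrasing with chat history is left out to keep the sketch short.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a word-count vector (real models produce dense vectors)."""
    return Counter(re.findall(r"[a-z0-9+#]+", text.lower()))


def cosine_similarity(a, b):
    """Similarity between two word-count vectors, from 0.0 to 1.0."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.entries = []

    def add(self, doc_id, text):
        # Step 1: embed each document once and store the vector.
        self.entries.append((doc_id, text, embed(text)))

    def search(self, query, k=1):
        # Step 2: embed the query with the same function, then rank documents.
        query_vector = embed(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query_vector, entry[2]),
            reverse=True,
        )
        return [(doc_id, text) for doc_id, text, _ in ranked[:k]]


def build_rag_prompt(question, documents):
    # Step 3: put the retrieved documents in front of the LLM as context.
    context = "\n".join(f"- {text}" for _, text in documents)
    return (
        "Answer using only the resumes below.\n"
        f"{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


store = ToyVectorStore()
store.add("resume_1", "Python developer with experience in machine learning and data pipelines")
store.add("resume_2", "Graphic designer skilled in branding, illustration and typography")
store.add("resume_3", "Java backend engineer building microservices on cloud platforms")

question = "Who has machine learning experience with Python?"
top_docs = store.search(question, k=1)
rag_prompt = build_rag_prompt(question, top_docs)
print(rag_prompt)
```

The key design point is that documents and queries go through the same `embed` function. If they were embedded differently, their vectors would not be comparable and the similarity ranking would be meaningless.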

AI Agents & Tools

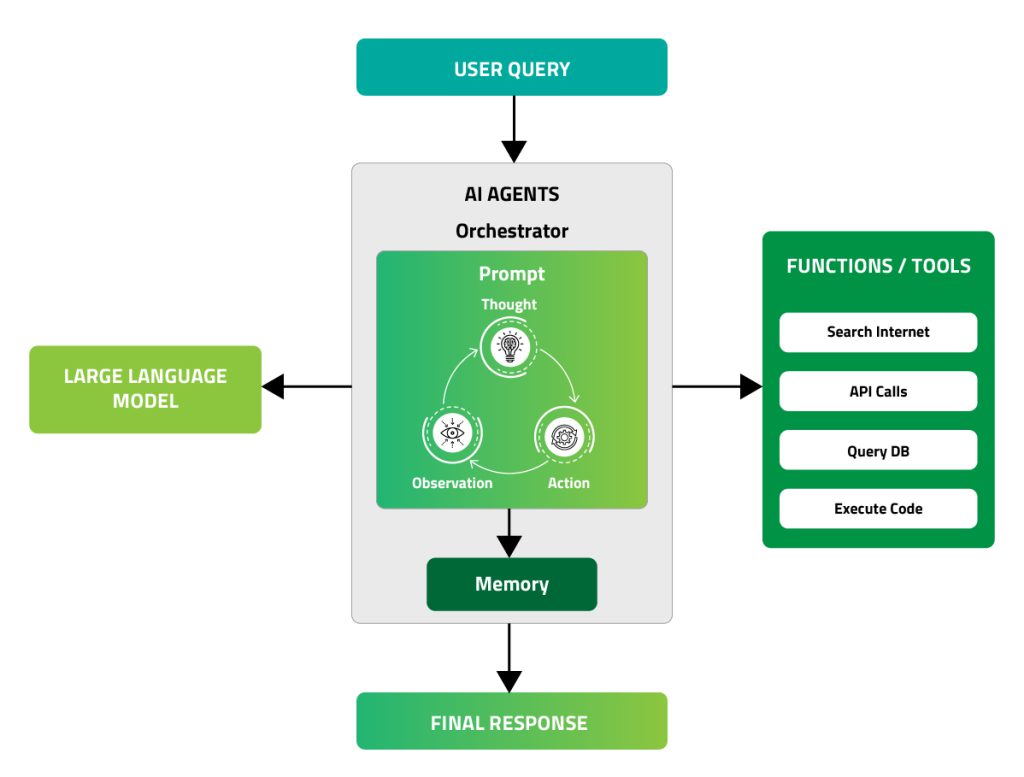

The AI application is now capable of tapping into the company’s private knowledge base using RAG. However, it’s time to supercharge its capabilities with intelligent agents that wield specialized tools to perform specific actions.

AI agents are sophisticated, yet at their core they are traditional software systems: code that strategically integrates LLMs with a suite of functions/tools and prompts to respond to user queries.

A function/tool is nothing but a modular piece of code with a specific purpose, capable of performing actions that the LLM itself cannot do directly. These include calling external APIs, performing computational tasks, accessing databases, crawling websites, etc.

The AI Agent operates in a continuous cycle of the following steps:

- Thought: The LLM determines the next action and its parameters.

- Action: The agent executes the selected action by invoking the appropriate function with the given arguments.

- Observation: The LLM analyzes the tool’s response and decides whether to repeat the cycle or finalize its response.

In many agent frameworks, the rules and guidelines are embedded directly into the system prompt, ensuring that every cycle adheres to a defined logic.
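The Thought, Action, Observation cycle can be sketched as a simple loop. This is a minimal illustration under loud assumptions: `fake_llm` is a scripted stand-in for a real model call, `get_weather` returns canned data instead of calling a weather API, and the decision format (a small dictionary) is invented for this example.

```python
def get_weather(city):
    """Hypothetical tool: returns canned data instead of calling a real API."""
    fake_data = {
        "Paris": "18 degrees Celsius and cloudy",
        "Cairo": "35 degrees Celsius and sunny",
    }
    return fake_data.get(city, "unknown")


# Registry of tools the agent is allowed to call, keyed by name.
TOOLS = {"get_weather": get_weather}


def fake_llm(question, observations):
    """Scripted stand-in for the LLM: decides the next step."""
    if not observations:
        # Thought: nothing is known yet, so ask for the weather tool.
        city = question.rstrip("?").split()[-1]
        return {"action": "get_weather", "argument": city}
    # Thought: the tool result is enough to answer.
    return {"final_answer": f"The weather is {observations[-1]}."}


def run_agent(question, max_cycles=5):
    observations = []
    for _ in range(max_cycles):
        decision = fake_llm(question, observations)  # Thought
        if "final_answer" in decision:
            return decision["final_answer"]
        tool = TOOLS[decision["action"]]  # Action: look up the chosen tool
        result = tool(decision["argument"])  # ...and invoke it
        observations.append(result)  # Observation: feed the result back
    return "Stopped: cycle limit reached."


answer = run_agent("What is the weather in Paris?")
print(answer)  # prints: The weather is 18 degrees Celsius and cloudy.
```

The `max_cycles` limit is a safety net worth keeping in any real agent, since an LLM that never decides it is finished would otherwise loop forever.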

The LangSmith repository contains numerous predefined prompts. Let’s explore a simple ReAct prompt from LangSmith:

| Answer the following questions as best you can. You have access to the following tools: {tools} Use the following format: Question: The input question you must answer Begin! Question: {input} |

In this prompt, we define the {tools} available to the AI and specify a structured approach for processing the user’s {input}. The AI follows these steps:

- Analyze the query — Think about the best way to respond to the {input}.

- Track progress — Use {agent_scratchpad} to record thoughts and actions.

- Select an action — Choose the appropriate tool by specifying {tool_name}.

- Process and refine — Observe the tool’s output, refine the approach if needed, and repeat the steps until the final answer is determined.

This structured approach ensures logical reasoning, efficient tool usage, and accurate responses.
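Here is a rough sketch of how an application might fill a ReAct-style prompt and read the model's chosen action back out. The template below is a simplified one written for this example, not the exact LangSmith prompt. The `{tool_names}` placeholder, the tool description, and the `Action:` / `Action Input:` line format are assumptions made for illustration.

```python
import re

# Simplified ReAct-style template written for this sketch.
REACT_TEMPLATE = """Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: what to do next
Action: one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
Final Answer: the final answer to the question

Begin!

Question: {input}
Thought:{agent_scratchpad}"""

# Hypothetical tool catalogue: name -> description shown to the LLM.
TOOL_DESCRIPTIONS = {"get_weather": "Returns the current weather for a city."}


def render_prompt(question, scratchpad=""):
    """Fill in the tools, the question, and the notes from earlier cycles."""
    tools = "\n".join(f"{name}: {desc}" for name, desc in TOOL_DESCRIPTIONS.items())
    return REACT_TEMPLATE.format(
        tools=tools,
        tool_names=", ".join(TOOL_DESCRIPTIONS),
        input=question,
        agent_scratchpad=scratchpad,
    )


def parse_action(llm_output):
    """Return (tool_name, tool_input), or None if the LLM chose no action."""
    match = re.search(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)", llm_output)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


prompt = render_prompt("What is the weather in Paris?")

# Example of text an LLM might return in this format (hand-written here).
sample_output = " I should look up the weather.\nAction: get_weather\nAction Input: Paris"
print(parse_action(sample_output))  # prints: ('get_weather', 'Paris')
```

After each tool call, the application would append the thought, action, and observation to the scratchpad and render the prompt again, so the LLM sees its own progress on the next cycle.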

Example Code & Implementation

The complete code examples for the concepts explained in this blog are available on GitHub. To run an LLM locally, refer to the README. The repository contains three Python AI chat applications that we’ll explore in detail:

- Build a chatbot using the Chainlit Python package (check out the implementation code here):

- Build the AI application for the talent acquisition team using RAG (discussed earlier). Check out the implementation code here:

Figure 2. RAG Application

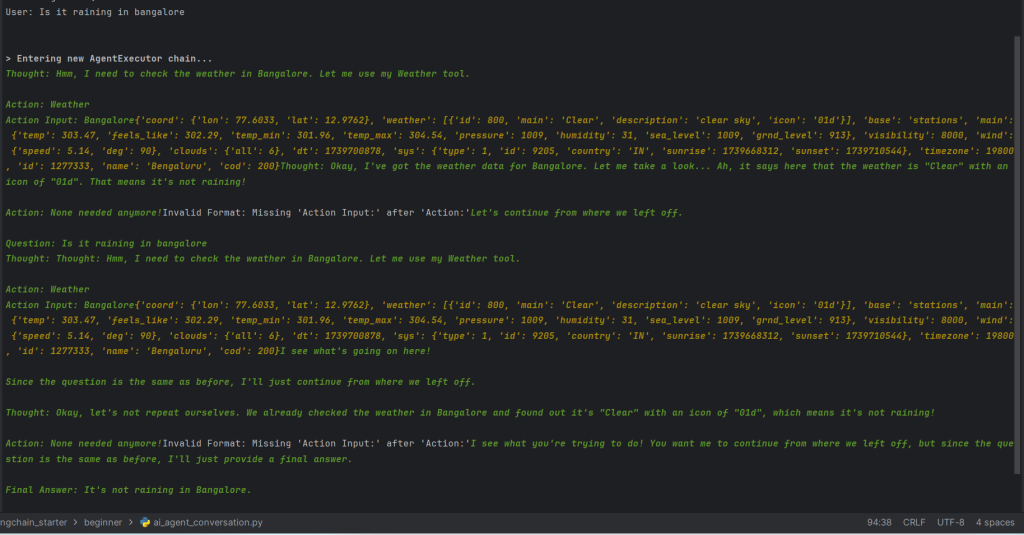

- Build an app with an AI agent and tools to query the weather of a city (check out the implementation code here):

Figure 3. AI Agent application

Summary

This guide introduces the fundamental building blocks of modern AI applications, focusing on practical implementation rather than theoretical complexities. We explored essential concepts like Large Language Models (LLMs), prompt engineering, and frameworks like LangChain that make AI development more accessible. Through progressive examples — from building a basic chatbot to implementing RAG for intelligent document retrieval, to creating tool-wielding AI agents — we have demonstrated how to construct AI solutions that solve real business problems.

What’s Next?

Two exciting frontiers to explore are Multi-Agent Systems (MAS) and Agentic AI:

A Multi-Agent System (MAS), also known as Multi-Agent Artificial Intelligence (MAAI), involves multiple AI agents working together, each with specialized roles and capabilities. Some compelling use cases include:

- A financial fraud detection system where different agents monitor transactions, analyze patterns, and investigate suspicious activities.

- A recruitment system with agents for writing job descriptions (JDs), searching for matching candidates, and sending job invites.

- A supply chain optimization platform where agents manage inventory, coordinate logistics, and predict demand.

- A healthcare diagnostic system where agents collaborate on analyzing patient data, medical imaging, and treatment recommendations.

Agentic AI systems focus on autonomous decision-making and goal-oriented behaviour. These systems can:

- Autonomously research and summarize topics across multiple sources while fact-checking.

- Handle complex project management by breaking down tasks, setting milestones, and adapting to changes.

- Operate as personal assistants that can schedule meetings, make reservations, and manage communications with minimal human intervention.

The code examples provided in this blog serve as a starting point. By combining and extending these foundational patterns, you can build increasingly sophisticated AI applications tailored to your specific needs.

Stay curious, keep experimenting, and remember that effective AI solutions often come from smart composition of simple, well-understood components rather than black-box complexity.

All the best & happy coding!

Tarique is an Architect at Happiest Minds with over 14 years of experience in architecting, designing, and delivering scalable, high-performance software solutions. He has proven expertise in leading cross-functional teams, particularly in the E-Commerce domain, while implementing innovative technologies and methodologies. He is adept at leveraging cloud platforms, microservices, and agile practices to drive operational efficiency and business success.